At the AI for Good Global Summit, Hao Li, CEO and Co-founder of Pinscreen and Associate Professor at the Mohamed bin Zayed University of Artificial Intelligence, explored how generative AI is transforming visual effects and what that means for society. His talk covered both the technology’s potential and the pressing ethical challenges it raises.

The Evolution of CGI and Generative AI

Generative AI, particularly in the field of computer-generated imagery (CGI), has revolutionized the way stories are told in the entertainment industry. Li recalled the profound impact of early CGI in films like Terminator 2, where the shape-shifting, liquid-metal T-1000 showcased the seemingly limitless possibilities of computer graphics. “Basically, what was telling me is that CGI or visual effects was able to do anything that is possible,” Li remarked, setting the stage for a career defined by pushing the boundaries of digital realism.

Films like Avatar epitomize the extent to which CGI has been integrated into modern filmmaking. With over 3,000 shots utilizing visual effects, Avatar demonstrated that almost everything in a film could be created or enhanced digitally.

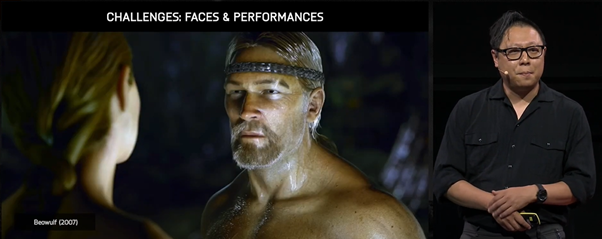

However, achieving realistic human faces has remained a significant challenge due to the “Uncanny Valley” effect. This phenomenon occurs when a digital representation of a human appears almost, but not quite, like a real human, causing discomfort among viewers. “CGI faces were pretty often very creepy and people would avoid them in movies,” Li noted, emphasizing how viewers’ sensitivity to human likeness can make near-realistic CGI characters appear unsettling.

Li further explained the complexity behind this effect. As CGI characters approach photorealism, viewers begin to notice even the smallest discrepancies, such as unnatural movements or slight imperfections in facial features, which can make these characters appear eerie or zombie-like. “Intuitively, you might think that if we add more realism to computer-generated imagery, then you might find it to have more empathy,” Li said. However, the opposite often happens: “Because we get more sensitive to it, we might notice everything that appears very bizarre.”

Advancements and Applications in Facial CGI

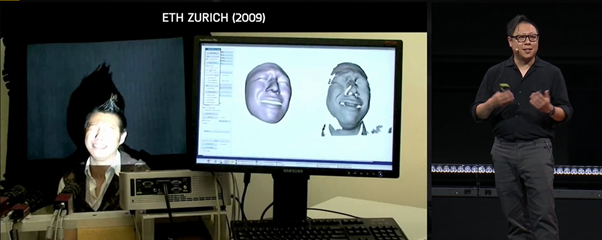

Over the years, technological advancements have significantly improved the realism of CGI faces. Techniques like multi-view stereo systems and photometric stereo systems have enabled high-resolution scans of actors, capturing intricate details down to the pores. Despite these advancements, the process remains complex and costly. “You need a very complex system right; it’s a million-dollar system, it’s hard to deploy, and not to mention the amount of heavy post-processing that happens afterwards,” Li explained.

Li’s work at ETH Zurich and subsequent projects at Weta Digital have been instrumental in breaking new ground in facial tracking and digital human creation. One notable project involved recreating the late Paul Walker’s face for the “Fast & Furious” franchise, showcasing the potential of generative AI in maintaining continuity in filmmaking despite real-world tragedies.

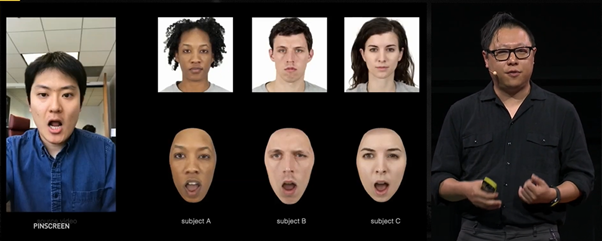

Li also highlighted the role of deep neural networks in advancing facial CGI. “Initially, deep neural networks were modeled using artificial neural networks, basically multi-layer perceptrons,” he explained. The breakthrough came with convolutional neural networks, which consist of multiple layers of linear and nonlinear functions. This technology allowed for detailed analysis of images, significantly enhancing the ability to generate realistic facial expressions. “Instead of using a deep neural network as a classifier, we can actually use it as a regression into different facial blend shapes,” Li said, emphasizing how these advancements have enabled the creation of robust systems capable of generating lifelike images in real time.

These innovations have expanded the applications of generative AI, making it possible to overlay facial expressions from one person onto another in real time, thereby opening new possibilities in the entertainment industry and beyond.
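The regression idea Li describes, and the expression transfer it enables, can be illustrated with a small sketch. This is not Li’s or Pinscreen’s implementation: it assumes a tiny two-layer perceptron with random, untrained parameters standing in for a trained convolutional network, a made-up feature vector standing in for an input image, and a hypothetical four-shape rig on a five-vertex mesh. All function and variable names are invented for illustration. The point is only the structure: layers of linear and nonlinear functions output continuous blendshape weights rather than class labels, and those weights can then drive a different person’s face rig.

```python
import numpy as np


def relu(x):
    """Nonlinear function applied between the linear layers."""
    return np.maximum(x, 0.0)


def regress_blendshape_weights(features, W1, b1, W2, b2):
    """Two-layer perceptron used as a regressor (not a classifier).

    linear -> ReLU -> linear, then clipped so every weight lies in [0, 1].
    """
    hidden = relu(features @ W1 + b1)
    return np.clip(hidden @ W2 + b2, 0.0, 1.0)


def apply_blendshapes(neutral, deltas, weights):
    """Linear blendshape model: neutral + sum_k weights[k] * deltas[k]."""
    return neutral + np.tensordot(weights, deltas, axes=1)


# Hypothetical sizes and random parameters (assumptions, not real model data).
rng = np.random.default_rng(0)
num_features, num_hidden, num_shapes, num_vertices = 16, 32, 4, 5
W1 = rng.normal(scale=0.1, size=(num_features, num_hidden))
b1 = np.zeros(num_hidden)
W2 = rng.normal(scale=0.1, size=(num_hidden, num_shapes))
b2 = np.full(num_shapes, 0.5)

# Stand-in for features extracted from a frame of the source performer.
source_features = rng.normal(size=num_features)
weights = regress_blendshape_weights(source_features, W1, b1, W2, b2)

# Expression transfer: reuse the source performer's weights on a different
# (target) rig, here a random neutral mesh with per-shape vertex offsets.
target_neutral = rng.normal(size=(num_vertices, 3))
target_deltas = rng.normal(scale=0.05, size=(num_shapes, num_vertices, 3))
animated = apply_blendshapes(target_neutral, target_deltas, weights)
```

In a production system the network would be trained on real footage and run once per video frame. The same pattern of regressing weights from one face and applying them to another rig is what the sketch is meant to convey.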

Pioneering Realistic Image Creation with Generative Adversarial Networks

Li highlighted the importance of Generative Adversarial Networks (GANs), particularly StyleGAN, in advancing generative AI, and underscored its transformative potential:

“If you train this network with sufficient data, you can actually generate realistic images of things that never existed […]. This is the crown jewel of generative AI,” he said.

These networks have enabled the creation of highly realistic images and facial expressions, broadening the scope of generative AI applications from entertainment to everyday use.

Li also touched upon other emerging technologies and methodologies within generative AI, emphasizing their combined potential to transform digital creation. However, with these advancements come significant ethical concerns, particularly regarding the misuse of AI-generated content.

Ethical Concerns and the Future of Generative AI

Despite its promising applications, generative AI raises significant ethical concerns, particularly regarding deepfakes. Li pointed out that a large portion of deepfake content is non-consensual pornography, targeting public figures and raising serious privacy issues. Deepfakes can also be weaponized in fraud and misinformation campaigns. As an example, he cited a finance worker who was tricked into carrying out a $25 million transaction by someone using audio-visual deepfakes in a live conversation. To his knowledge, this was one of the first instances of deepfakes being used in this way, and it illustrates the technology’s real-world consequences.

“The main problem here is that we now have a technology, as opposed to in the past that were only accessible to visual effects and production studios, that is accessible to everyone,” Li stressed.

To combat these issues, Li emphasized the importance of developing robust deepfake detection technologies and raising public awareness. “Together with the World Economic Forum, we’ve actually developed the first real-time deepfake technology,” he noted, showcasing efforts to stay ahead of potential misuse.

Enhancing Communication and Beyond

Li envisions generative AI playing a crucial role in enhancing communication and creating realistic virtual avatars.

“We’re seeing a lot of things in applications and visual effects, but I think a much bigger impact is if we can use this technology to enhance communication,” Li said.

He highlighted the potential for generative AI to revolutionize virtual interactions by enabling the creation of realistic avatars from a single photograph. The approach relies on disentanglement, which separates factors such as identity and expression, to generate photorealistic avatars that can mimic facial movements and expressions in real time.

Li discussed the broader implications within virtual reality, where combining generative AI with VR headsets can enhance realism in virtual meetings, educational sessions, and social interactions. Additionally, in the entertainment industry, such technology could create digital doubles, perform de-aging effects, and make actors convincingly speak different languages, thus expanding their versatility and reducing the need for traditional dubbing or subtitles.

Li’s presentation underscored that while generative AI offers remarkable advancements in enhancing visual effects and communication, it also necessitates careful consideration of its ethical implications and robust safeguards against misuse.

“AI is really the tool of choice, but at the same time, when we talk about technology that can generate anything, diffusion model-based technology is one of the key challenges,” he explained.

As these technologies become more accessible, the industry must address challenges related to content control and the development of user-friendly interfaces.

Hao Li’s insights at the AI for Good Global Summit serve as both a testament to the remarkable capabilities of generative AI and a call to action for responsible innovation in this rapidly evolving field.