Why is trust in artificial intelligence (AI) important?

Imagine an app that could help farmers use water as efficiently as possible. It could save them money and water, but farmers don't use it because they are unfamiliar with the app's developer or unsure how the app will use their data.

Imagine two countries competing for leadership in AI. One country announces breakthroughs. The other fears it is falling behind and redirects resources in a bid to catch up, ceasing investment in ethical AI and ‘AI for Good’.

Imagine a medical system able to diagnose a type of skin cancer with 95 per cent accuracy, but it relies on an opaque form of machine learning. Doctors can't explain the system's decisions. One day, the doctors see the system make a mistake that they would never have made. Confidence in the system collapses.

“What these three examples have in common is a breakdown of trust,” explains Stephen Cave, Cambridge University, one of the members of the breakthrough team on ‘trust in AI’ at the 2nd AI for Good Global Summit. “In each of these cases, real opportunities to use AI for good are lost.”


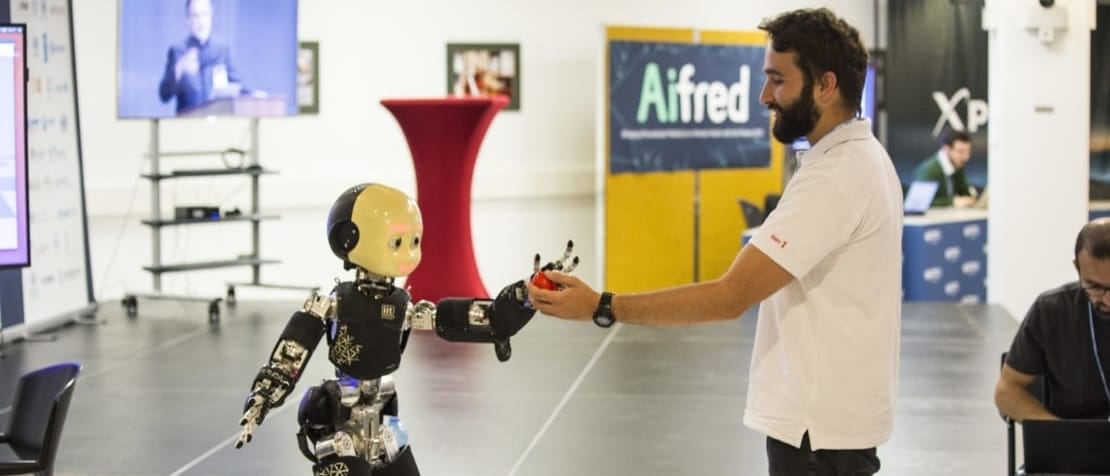

The summit connected AI innovators with public and private-sector decision-makers. Four breakthrough teams – looking at satellite imagery, healthcare, smart cities, and trust in AI – set out to propose AI strategies and supporting projects to advance sustainable development. Teams were guided in this endeavour by an expert audience representing government, industry, academia and civil society. The matchmaking exercise introduced problem owners to solution owners, building collaboration to take promising strategies forward.

The nine projects proposed by the breakthrough team on trust in AI aim to address three dimensions of trust set out by Stephen Cave:

- AI developers must earn the trust of the stakeholder communities they serve

- We must achieve trust across national, cultural and organizational boundaries

- AI systems themselves must be demonstrably trustworthy

The nine AI for Good projects will be supported by trustfactory.ai, which will also provide other projects with community-oriented enabling infrastructure.

“The Trust Factory will be an incubator for projects to build trust in AI, a community able to host multidisciplinary collaboration,” says Francesca Rossi, IBM Research and University of Padova, one of the leaders of the breakthrough team.

The team behind trustfactory.ai

Team leads: Huw Price, Francesca Rossi, Zoubin Ghahramani, Claire Craig

Team members: Stephen Cave, Kanta Dihal, Adrian Weller, Seán Ó hÉigeartaigh, Jess Whittlestone, Charlotte Stix, Susan Gowans, Jessica Montgomery

Theme managers: Ezinne Nwankwo, Yang Liu, Jess Montgomery

The projects

Proposed projects aim to build trust in AI’s contribution to agriculture and mental health. They will investigate strategies for developing countries to maintain social stability as AI-driven automation influences labour markets. They will explore how the concept of trust varies across cultures, and they will study how policymakers could encourage the development of trustworthy AI systems and datasets free of bias.

Theme A: Stakeholder communities

- Building better care connections: establishing trust networks in AI mental healthcare – Becky Inkster (Department of Psychiatry, Cambridge)

- Assessing and Building Trust in AI for East African Farmers: A Poultry App for Good – Dina Machuve (Nelson Mandela African Institute of Science and Technology and Technical Committee Member for Data Science Africa)

- Building Trust in AI: Mitigating the Effects of AI-induced Automation on Social Stability in Developing Countries & Transition Economies – Irakli Beridze (United Nations Interregional Crime and Justice Research Institute, UNICRI)

Theme B: Developer communities

- Cross-cultural comparisons for trust in AI – LIU Zhe (Peking University, Beijing)

- Global AI Narratives – Kanta Dihal (Leverhulme Centre for the Future of Intelligence, Cambridge)

- Cross-national comparisons of AI development and regulation strategies: the case of autonomous vehicles – David Danks (Carnegie Mellon University, CMU)

Theme C: Trustworthy systems

- Trust in AI for governmental decision-makers – Jess Whittlestone (Leverhulme Centre for the Future of Intelligence, Cambridge)

- Trustworthy data: creating and curating a repository for diverse datasets – Rumman Chowdhury (Accenture)

- Cross-cultural perspectives on the meaning of ‘fairness’ in algorithmic decision making – Krishna Gummadi (Max Planck Institute, Saarbrücken) and Adrian Weller (Leverhulme Centre for the Future of Intelligence, Cambridge, and Alan Turing Institute, London)