How can AI expand opportunity while avoiding new divides? During the United Nations General Assembly, a high-level event organized by the International Telecommunication Union (ITU) and hosted by Deloitte explored how to equip societies for the AI era through skills training, ethical governance, and international standards.

The discussion built on the momentum of the AI for Good Global Summit 2025 and the landmark United Nations resolution on AI, bringing together leaders from government, industry, academia, and civil society to align around three priorities: building AI skills, strengthening governance frameworks, and turning principles into practical tools for real-world impact.

Building AI skills for all

Participants stressed that AI’s potential will only be realized if access to skills keeps pace with technological progress. The AI Skills Coalition, launched earlier this year, now includes more than 60 partners and over 80 online training resources.

“[The AI Skills Coalition] is a natural partnership with the United Nations, with diverse actors from industry and influential voices, to land and expand and do as much as we can to uplift the species,” said Chris Howard, Executive Vice President and Chief Operating Officer of Arizona State University.

Earlier in the week, during the Digital@UNGA event, a new project was announced to provide AI learning resources for Robotics for Good competitors across five African countries, supported by a $1 million donation from Google.org.

Musician, tech founder, and AI Skills Coalition ambassador will.i.am linked community robotics programs to durable skills and confidence: for him, robotics is not just about building a robot, but about teamwork, purpose, and belonging.

“It’s about teaching kids what competition is […] and showing these kids that they can be a part of the world of solving problems, applying themselves and building companies,” will.i.am explained.

Speakers highlighted that scaling access requires both reach and relevance. Online courses can reach millions of learners, but local partnerships remain essential to adapt training to specific community needs.

“We want to do things at scale, but that scale includes a whole consortia of partners that we bring together to take it farther and do even more with it,” noted Maggie Johnson, Vice President and Global Head of Google.org.

Others underlined the urgency of connecting skills development with employment pathways. By 2030, over a billion young people will enter the workforce, yet current projections show a shortfall of hundreds of millions of jobs. If deployed responsibly, AI could help close this gap, but only if education systems and employers align around the skills most needed for the future.

“We need to make sure that we’re delivering learning in a way that meets the needs of the young people that we’re servicing and helps them to take that, and forge new careers,” Tom Kaye, Senior Advisor Global Programmes at UNICEF’s Generation Unlimited, explained. “Learning is not an end unto itself.”

Some participants also cautioned against overconfidence in predicting which skills will matter most. As one participant noted, technologies evolve quickly: what seems essential today may be automated tomorrow. Preparing for this uncertainty, they argued, requires humility as well as investment in education that helps people adapt to change rather than chase static skill sets.

“Let’s make sure we never build replacements for humanity. We need AI tools for solving real problems. You want to cure cancer? There is no shortage of different types of cancer you can work on,” urged Roman Yampolskiy, faculty member in the Department of Computer Science and Engineering at the University of Louisville.

The message was clear: access, agency, and ethics must guide AI skilling, so technology expands opportunity instead of leaving people behind.

Strengthening AI governance and standards

The conversation then turned to governance and safeguards, examining how international coordination can ensure that AI develops in ways that are transparent, inclusive, and aligned with human rights.

For instance, law enforcement agencies, participants noted, increasingly rely on AI to process vast volumes of data, even as criminals weaponize the same technologies.

“The key question here is to ensure that they will use it in a responsible and human rights compliant manner,” explained Irakli Beridze, Head of the Centre for Artificial Intelligence and Robotics at the United Nations Interregional Crime and Justice Research Institute (UNICRI).

Ensuring responsible and rights-compliant use requires concrete mechanisms, not just principles. Examples included governance toolkits for law enforcement, now being piloted in multiple countries, and global forums that have tracked the rapid growth of AI applications since 2017.

Yet many governments still lack national AI strategies, and challenges such as unequal access and fragmented regulation persist. Participants urged policymakers to prioritize strategy, literacy, and capacity building so that innovation benefits all regions rather than concentrating in a few.

Discussions also explored the surge in AI-generated misinformation affecting journalists, human rights defenders, and public trust. Emerging practices, such as authenticity requirements for digital content in California, provisions on synthetic media in the EU AI Act, and devices that authenticate both capture and AI edits, were cited as early models for combining innovation with accountability.

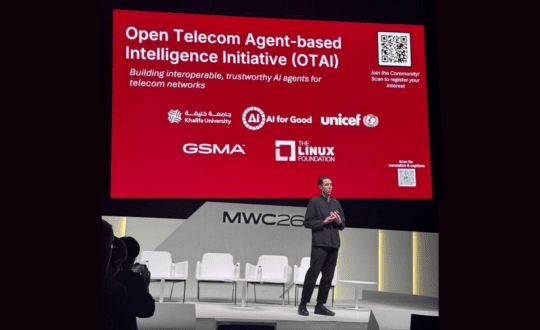

Throughout the session, it was argued that standards play a critical role in turning high-level principles into practical, interoperable solutions. The ITU, together with partners ISO and IEC, has already coordinated work on hundreds of AI-related standards, from deepfakes to energy management, and within a year of the UN Global Digital Compact’s call for action, the International AI Standards Exchange was launched to accelerate collaboration across borders.

Finally, participants highlighted the need to close the global connectivity gap:

“A third of the world is unconnected right now, that’s really preventing from all these use cases from scaling globally,” noted Frederic Werner, Chief of Strategy and Operations, AI for Good, ITU.

Expanding connectivity, they argued, is essential not only for economic opportunity but also for ensuring that global standards reflect diverse perspectives rather than the priorities of the most technologically advanced nations alone.

A call for collaboration

Across both panels, a consistent theme emerged: AI’s benefits will only be realized if education, governance, and implementation advance together. Building AI skills requires not only technical training but also ethics and human-centered design. Governance frameworks and international standards must keep pace with innovation, ensuring trust, transparency, and inclusivity.

And real-world impact depends on moving beyond declarations toward practical tools, national strategies, and programs that deliver measurable results. The session underscored that collaboration across sectors and borders is essential to ensure AI serves all of humanity, not just the most connected or technologically advanced regions.