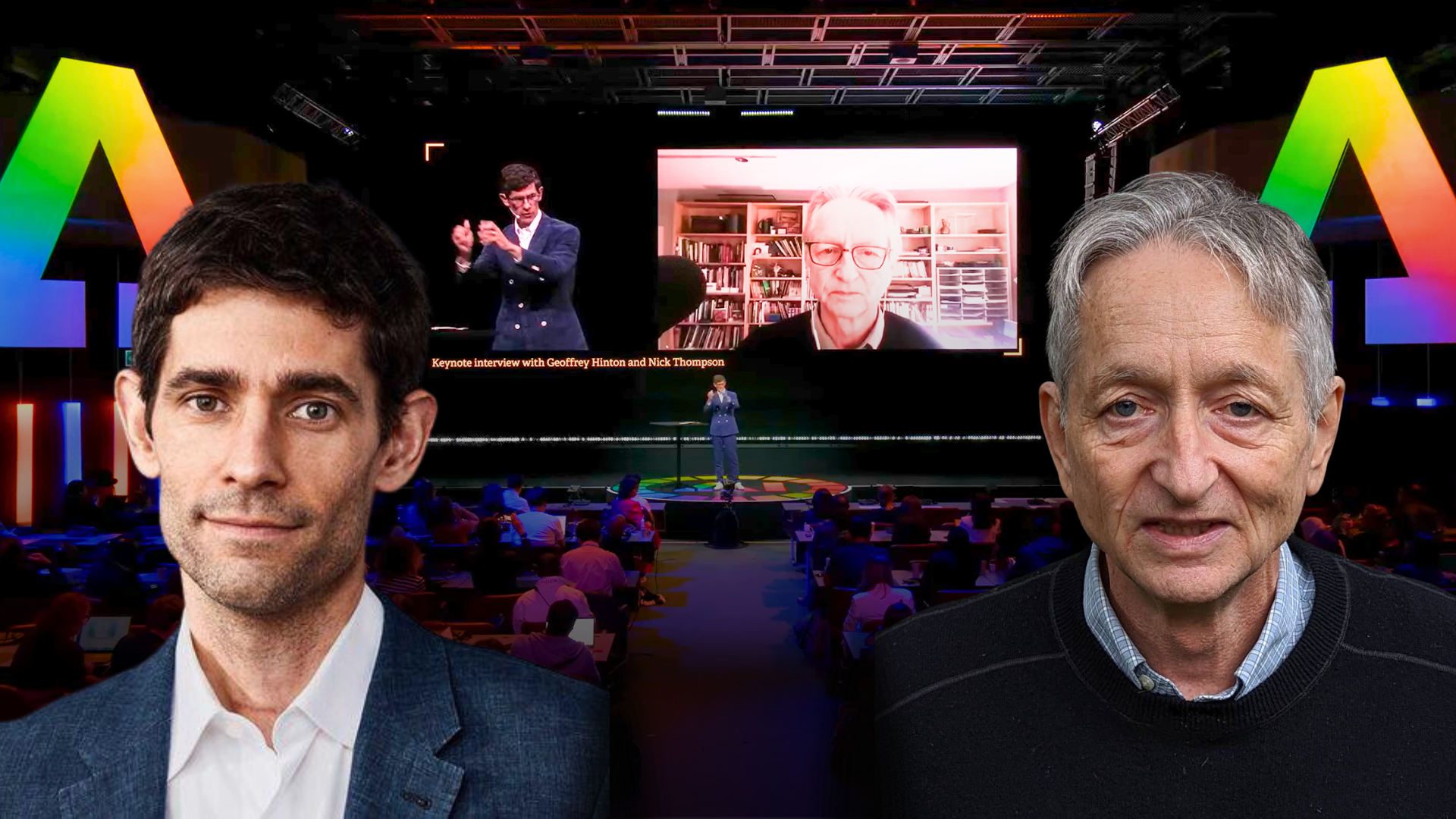

At the AI for Good Global Summit in Geneva, one of the most highly anticipated sessions featured Geoffrey Hinton, a pioneering figure in the field of AI, interviewed by Nicholas Thompson, CEO of The Atlantic. Hinton, known for his groundbreaking contributions to AI, took the stage to discuss the profound impacts and future of the technology. The session began with a warm introduction, acknowledging Hinton’s influence and his reputation as a kind and brilliant mind in AI.

Reflecting on a personal anecdote from a year prior, Hinton humorously advised that plumbing might be a more lasting profession than many others, highlighting AI’s current limitations in physical manipulation. This light-hearted exchange set the stage for a deep dive into Hinton’s background and the evolution of his ideas about AI.

Hinton recounted his early realization that modeling AI on the human brain’s architecture could lead to powerful computing systems. Despite initial skepticism from the scientific community, this insight eventually gained recognition and paved the way for significant advancements in AI. Hinton’s journey included receiving the Turing Award and doing influential work at Google, where he further developed his ideas.

A pivotal moment came in early 2023 when Hinton became acutely aware of the existential threats posed by AI. He decided to retire from Google to speak freely about these concerns. This decision was partly influenced by his work on analog computers and the realization that digital computation offers unique advantages, such as the ability to create exact copies of a model that can efficiently share what each copy has learned and so learn at an unprecedented scale. This capability, Hinton noted, allows AI systems like GPT-4 to accumulate knowledge far beyond human capacity.

“Up until that point, I’d spent 50 years thinking that if we could only make it more like the brain, it will be better,” Hinton explained. “I finally realized at the beginning of 2023 that it has something the brain can never have because it’s digital—you can make many copies of the same model that work in exactly the same way.”

The conversation then shifted to a comparison with his peers, Yann LeCun and Yoshua Bengio, highlighting differing views on AI’s potential and risks. Hinton emphasized that while some see AI as relatively easy to control, he believes in its profound capabilities and potential dangers.

“I think it really is intelligent already, and Yann thinks a cat’s more intelligent,” Hinton said, underscoring the differences in their perspectives.

One of the most intriguing topics was the nature of AI intelligence and whether it could replicate or surpass human cognitive abilities. Hinton argued that AI could indeed match and exceed human capabilities, including aspects often considered uniquely human, such as creativity and subjective experience. He proposed that AI systems might already possess a form of subjective experience, challenging conventional views of consciousness.

“My view is that almost everybody has a completely wrong model of what the mind is,” Hinton asserted.

The discussion moved to the challenges of understanding AI systems’ inner workings. Hinton explained that the complexity and interdependence of numerous weak regularities within AI models make it difficult to interpret their decisions. He acknowledged efforts like those of Anthropic in analyzing AI models but suggested that training AI on empathetic data might be more effective than manually adjusting weights.

Hinton also explored the potential benefits of AI, particularly in healthcare. He predicted that AI would eventually surpass human clinicians in interpreting medical images and integrating vast amounts of patient data to provide superior medical care. He also highlighted AI’s potential in scientific research, such as drug discovery and understanding complex biological systems.

“It’s going to be much better at interpreting medical images,” Hinton said. “In 2016, I said that by 2021 it will be much better than clinicians at interpreting medical images, and I was wrong. It’s going to take another five to ten years.”

However, Hinton expressed concerns about the distribution of AI’s benefits. He warned that while AI could significantly increase productivity, the resulting wealth might exacerbate economic inequalities unless regulated appropriately. He advocated for measures like universal basic income and emphasized the need for robust regulation to ensure AI’s positive impact on society.

“We live in a capitalist system, and the capitalist systems have delivered a lot for us, but we know some things about capitalist systems,” Hinton explained. “[They need regulation so that] in their attempts to make profits, they don’t screw up the environment, for example. We clearly need that for AI, and we’re not getting it nearly fast enough.”

On the topic of regulation, Hinton proposed that governments should ensure significant resources are dedicated to AI safety. He also suggested innovative approaches to combat misinformation, such as inoculating the public against fake videos through exposure to less harmful examples.

“I think there’s a bunch of philanthropic billionaires out there,” Hinton suggested. “They should spend their money […] putting on the airwaves a month or so before these elections lots of fake videos that are very convincing. At the end, they say, ‘This was fake,’ […] and then you’ll make people suspicious of more or less everything.”

In closing, Hinton reflected on the evolution of AI and its implications for understanding the human brain. He noted that AI models trained with backpropagation provide valuable insights into human cognition, bridging the gap between psychological theories and computational models.

“The origin of these language models using back prop to predict the next word was not to make a good technology; it was to try and understand how people do it,” Hinton concluded. “So I think the way in which people understand language, the best model we have of that is these big AI models.”
