At the 2025 AI for Good Global Summit, Qizhen Weng, Director of the AI Infrastructure Research Center at China Telecom, presented a comprehensive overview of the company’s forward-looking initiative: AI Flow. Positioned at the intersection of AI and communications infrastructure, AI Flow aims to enable ubiquitous intelligence by bridging the gap between devices, edge computing, and the cloud. Weng’s keynote laid out China Telecom’s foundational advantages, its recent institutional developments, and the company’s vision for a future where intelligence flows freely across systems.

Building the foundation for AI Flow

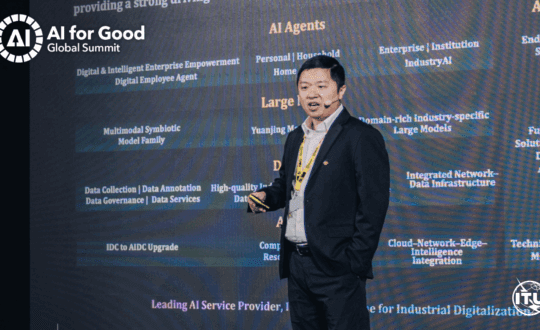

Weng began by outlining five core strengths that underpin China Telecom’s AI Flow vision. First is the company’s intelligent cloud infrastructure, with over 22 EFLOPS of computational power and multiple clusters comprising more than 10,000 accelerators distributed across the country. This full-stack AI capability supports large-model training and inference at national scale.

Second is the development of a trusted data platform. By combining privacy-preserving computation and blockchain technologies, China Telecom has built a secure data exchange system over high-speed networks that enables cross-industry data sharing.

Third is the TeleAI Foundation Model, developed using the company’s national intelligent cloud and big data platforms.

“We’ve launched the TeleAI large foundation model including 7 billion, 12 billion up to 115 billion parameters, which is also the first large language model open sourced by a state-owned enterprise,” Weng said.

The fourth strength is internal adoption. Using TeleAI models, China Telecom is deploying AI across its own operations. One example is an energy-saving model that improves the efficiency of the company’s AI infrastructure through predictive analytics and automated decision-making.

Finally, China Telecom offers external industry-specific solutions built on the foundation model. These include custom models for education, healthcare, and manufacturing. Weng shared that the ICT model developed for small and medium-sized enterprises has already been adopted by over 2,000 companies.

In 2024, the company formalized its AI ambitions by launching the Institute of Artificial Intelligence (TeleAI). Officially unveiled in May under the leadership of Professor Xuelong Li, the institute’s mission is to explore the frontiers of AI while advancing applications that support social development.

What is AI Flow?

The concept of AI Flow emerged as part of TeleAI’s research agenda.

“AI Flow represents a fundamental breakthrough in the next generation intelligent networking,” Weng explained.

The idea is to reimagine how AI and communications networks can work together to enable seamless intelligence.

At its core, AI Flow seeks to build systems that are more inclusive, equitable, and sustainable. It enables the integration of AI with communications technology to create smarter, more efficient systems that benefit both individuals and industries. By bridging the gap between information technology and communications technology, AI Flow supports cloudification and digital transformation.

The goal is to support the development of three “spatial economies”: cyberspace, visible earth space, and wide-area space. According to Weng, AI Flow redefines the boundary of human-machine interaction and opens new possibilities for cognition and collaboration.


The three principles of AI Flow

The first principle of AI Flow is device-edge-cloud collaboration. In this system, low-end devices, edge servers, and cloud centers work together to provide a flexible and resilient infrastructure for AI applications. Depending on network stability, inference can shift among the device, the edge, and the cloud.

Weng offered a practical example. In ideal conditions, high-performance models in the cloud can be used for complex tasks. If the network is unstable, edge computing steps in to handle intermediate processing, ensuring service continuity. And in the absence of any connection, local lightweight models enable simple tasks to be processed directly on the device.
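The fallback Weng described can be sketched as a simple placement policy. The Python below is a minimal illustration under stated assumptions, not China Telecom’s implementation: the `NetworkStatus` fields, the `select_tier` function, and the 2% packet-loss threshold are hypothetical stand-ins for whatever signals a real system would use to judge link stability.

```python
from dataclasses import dataclass

# Hypothetical threshold: above this packet-loss rate the cloud link is
# treated as unstable. Illustrative only, not a value from the talk.
MAX_CLOUD_LOSS = 0.02


@dataclass
class NetworkStatus:
    connected: bool       # is there any uplink at all?
    packet_loss: float    # observed loss rate on the cloud path (0.0 to 1.0)
    edge_reachable: bool  # does a nearby edge server respond?


def select_tier(status: NetworkStatus) -> str:
    """Choose where to run inference: "cloud", "edge", or "local"."""
    if not status.connected:
        # No connection: fall back to the lightweight on-device model.
        return "local"
    if status.packet_loss <= MAX_CLOUD_LOSS:
        # Stable link: use the high-performance cloud model.
        return "cloud"
    if status.edge_reachable:
        # Unstable cloud link: let the edge server keep the service running.
        return "edge"
    # Connected, but neither cloud nor edge is usable.
    return "local"


print(select_tier(NetworkStatus(connected=True, packet_loss=0.10, edge_reachable=True)))  # prints "edge"
```

In this sketch, a device with an uplink but 10% packet loss and a reachable edge server is routed to the edge tier. A production scheduler would probably also weigh latency, battery level, and task complexity, which this example deliberately ignores.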

This collaborative structure also supports embodied AI systems. In a video demonstration, a humanoid robot controlled by independent modules in the limbs executed complex actions like carrying objects and using tools. The system relied on a private 5G network and an edge-deployed AI model to meet the strict latency requirement of under 20 milliseconds.

“This approach dramatically reduces the data transmission delay and [enables] reliable and stable robot operation,” Weng explained.
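Why edge placement matters for a sub-20 ms control loop can be seen by writing the round trip out as a budget check. The 20 ms bound comes from the talk; the per-hop timings and placement names below are invented for illustration and are not measurements from the demonstration, and `placements_within_budget` is a hypothetical helper.

```python
# The 20 ms bound is the requirement cited in the talk; everything else here
# (hop timings, placement names) is a hypothetical illustration.
LATENCY_BUDGET_MS = 20.0


def control_loop_latency_ms(uplink_ms: float, inference_ms: float, downlink_ms: float) -> float:
    """Sensor data goes up, the model runs, and the command comes back down."""
    return uplink_ms + inference_ms + downlink_ms


def placements_within_budget(candidates: dict[str, tuple[float, float, float]],
                             budget_ms: float = LATENCY_BUDGET_MS) -> list[str]:
    """Return the placements whose round trip is strictly under the budget."""
    return [
        name
        for name, (up, infer, down) in candidates.items()
        if control_loop_latency_ms(up, infer, down) < budget_ms
    ]


# Invented timings (uplink, inference, downlink) in milliseconds.
CANDIDATES = {
    "edge_over_private_5g": (4.0, 8.0, 4.0),   # 16 ms total
    "remote_cloud": (15.0, 6.0, 15.0),         # 36 ms total
}

print(placements_within_budget(CANDIDATES))  # ['edge_over_private_5g']
```

The point of the arithmetic is that transmission time is charged twice per control cycle, so shortening the network path can matter as much as speeding up the model itself.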

The second principle of AI Flow involves familial models: groups of models with shared architectures and aligned hidden features that enable smooth collaboration across devices. These models can flexibly switch configurations depending on hardware constraints, using techniques like early exit or weight decomposition.
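Early exit, one of the two techniques Weng named, can be illustrated with a toy model in which every family member shares one layer stack and differs only in how deep it is allowed to run. This is a minimal sketch assuming scalar hidden states, hand-written layers, and a confidence threshold; `early_exit_inference`, `max_depth`, and `threshold` are hypothetical names, and weight decomposition is not shown.

```python
from typing import Callable, List, Tuple

Layer = Callable[[float], float]
ExitHead = Callable[[float], Tuple[str, float]]  # hidden state -> (label, confidence)


def early_exit_inference(x: float, layers: List[Layer], heads: List[ExitHead],
                         max_depth: int, threshold: float) -> Tuple[str, float, int, float]:
    """Run the shared layer stack, stopping early when an exit head is confident.

    `max_depth` models the hardware budget: a phone might afford only the
    first few layers, a cloud server the whole stack. Returns
    (label, confidence, layers_used, hidden_state).
    """
    h = x
    label, conf = "", 0.0
    depth = min(max_depth, len(layers))
    for i in range(depth):
        h = layers[i](h)
        label, conf = heads[i](h)
        if conf >= threshold:
            return label, conf, i + 1, h  # confident enough: exit here
    return label, conf, depth, h  # hit the hardware limit; return best effort


# Toy family: each layer adds 1.0, and confidence grows with the hidden value.
layers = [lambda h: h + 1.0 for _ in range(4)]
heads = [lambda h: ("obstacle" if h >= 2 else "clear", min(1.0, h / 4)) for _ in range(4)]

print(early_exit_inference(0.0, layers, heads, max_depth=4, threshold=0.7))  # ('obstacle', 0.75, 3, 3.0)
print(early_exit_inference(0.0, layers, heads, max_depth=2, threshold=0.7))  # ('obstacle', 0.5, 2, 2.0)
```

The same weights serve both calls: a deployment that can afford four layers exits at layer three once the confidence reaches 0.75, while a two-layer device returns its best effort at lower confidence.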

Weng contrasted familial models with traditional approaches, which typically combine independently built models, an arrangement that often leads to redundancy and limited flexibility. Familial models, by comparison, can be distributed across smartphones, edge servers, and cloud centers to form an efficient inference network.

One example is a smart guide dog. A lightweight model on the device guides users through simple environments. When a complex situation arises, such as encountering obstacles or moving from indoors to outdoors, the system forwards its partial results to a larger cloud model, which then completes deeper inference based on those inputs.
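The handoff can be sketched as split inference over a shared layer stack: the device runs the first layers, uploads the hidden state, and the cloud resumes from that point instead of starting over. This is a minimal sketch assuming that “partial results” means an intermediate hidden state plus the layer index to resume from; the talk does not specify the payload, and `run_layers`, `device_stage`, and `cloud_stage` are hypothetical names.

```python
from typing import Callable, List, Tuple

Layer = Callable[[int], int]


def run_layers(h: int, layers: List[Layer], start: int, stop: int) -> int:
    """Apply layers[start:stop] in order to the hidden state h."""
    for layer in layers[start:stop]:
        h = layer(h)
    return h


def device_stage(x: int, layers: List[Layer], split: int) -> Tuple[int, int]:
    """On-device part: run the first `split` shared layers and package the
    partial result (hidden state, layer index to resume from) for upload."""
    return run_layers(x, layers, 0, split), split


def cloud_stage(payload: Tuple[int, int], layers: List[Layer]) -> int:
    """Cloud part: resume from the received hidden state instead of
    recomputing the layers the device already ran."""
    hidden, resume_at = payload
    return run_layers(hidden, layers, resume_at, len(layers))


# Toy shared stack; k=k binds each layer's own constant.
layers = [lambda h, k=k: 2 * h + k for k in range(6)]

payload = device_stage(1, layers, split=2)   # device runs layers 0 and 1
print(payload)                               # (5, 2)
print(cloud_stage(payload, layers))          # 121, same as running all 6 layers in one place
```

Because both sides share the same architecture and feature space, the split result matches a single full run, which is the “no duplication” property the article describes.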

This synergy demonstrates how different-sized models can work together without duplication, maintaining both efficiency and reliability.

The third principle is collaborative intelligence, a shift from data-driven emergence to interaction-driven intelligence. Weng pointed out a looming bottleneck in AI development: “In the near future, the generation speed of high-quality data used for training will be far behind the consumption speed of AI training.”

To address this, AI Flow proposes a new model where intelligence emerges from interaction among multiple agents and their environment. Rather than depending solely on massive training datasets, systems draw on shared knowledge, real-time interaction, and collaboration.

To illustrate this, Weng presented a multi-agent system featuring drones, robotic dogs, and robotic arms. In the demonstration, a voice command triggered a sequence of coordinated tasks: drones detected obstacles, robotic dogs opened doors, and robotic arms retrieved food.

“This demonstrates the potential of [a] multi-agent system to perform complex coordinated task[s] efficiently in [a] real-world environment,” Weng said.
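One way to picture the coordination in this demonstration is as a task dependency graph that releases each agent’s job only when its prerequisites are complete. The sketch below uses Python’s standard `graphlib.TopologicalSorter`; the plan, the task names, and the dependencies between them are assumptions made for illustration, since the talk does not describe how the system actually schedules its agents.

```python
from graphlib import TopologicalSorter

# Hypothetical plan a planner might derive from a voice command such as
# "bring me some food". Keys are tasks ("agent:action"); values are the
# tasks that must finish first. The dependencies are assumptions.
PLAN = {
    "drone:detect_obstacles": set(),
    "dog:open_door": {"drone:detect_obstacles"},
    "arm:retrieve_food": {"dog:open_door"},
}


def dispatch(plan: dict[str, set[str]]) -> list[list[str]]:
    """Release tasks in dependency order, returning them as waves.

    Tasks in the same wave have no unmet prerequisites and could be sent to
    different agents in parallel.
    """
    sorter = TopologicalSorter(plan)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        for task in ready:
            # A real system would send `task` to its agent and wait for a
            # completion report; here we mark it finished immediately.
            sorter.done(task)
    return waves


print(dispatch(PLAN))
# [['drone:detect_obstacles'], ['dog:open_door'], ['arm:retrieve_food']]
```

With this hypothetical plan the waves form a simple chain; independent tasks, such as two drones scanning different rooms, would land in the same wave and could run concurrently.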

Looking ahead

China Telecom envisions AI Flow as a foundation for future AI applications that are responsive, distributed, and seamlessly integrated into users’ lives. By combining familial models with a device-edge-cloud architecture and fostering collaborative intelligence, AI Flow aims to let intelligence move freely across devices, the edge, and the cloud.

“In the future, we hope that the intelligence generated by this connectivity will unlock the free flow of intelligence,” Weng concluded.