As a United Nations agency with a specialized focus on digital technologies, the International Telecommunication Union (ITU) strives to align new and emerging technologies with the global Sustainable Development Goals (SDGs).

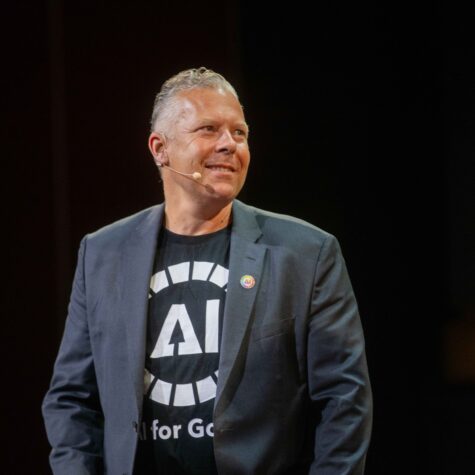

Through the AI for Good platform, we connect artificial intelligence (AI) innovators with what we call "problem owners" and help them build a common language so they can work together on solutions.

Making those connections and creating opportunities for collaboration will be among the most important elements in delivering on the promise of AI for Good at scale.

When COVID-19 hit, we had to reinvent ourselves.

From a yearly physical event in Geneva, Switzerland, we have become a virtual channel available all year round. We've built a 60,000-strong community from more than 170 countries.

Participation from developing countries has more than doubled – and gender balance has improved as well.

Five years on, AI for Good is no longer just a summit. Its shift toward action-driven collaboration has turned it into a year-round digital platform where AI enthusiasts come to learn, connect and build.

We are setting up an AI-powered community matchmaking platform, where users will receive recommendations drawn from five years of AI for Good content and can be matched with people who share their areas of expertise and action.

AI for Good complements the pre-standardization work of ITU Focus Groups examining AI applications in the environment, energy, autonomous driving and road safety, health, agriculture and other areas.

At our Innovation Factory, start-ups pitch AI innovations that contribute to the UN Sustainable Development Goals for 2030.

We issue problem statements targeting bottlenecks that could prevent AI from scaling. Our challenge on AI and machine learning (ML) in 5G brings professionals and students together to compete in solving problems such as how a windy or hot day affects 5G network transmission.

The maturing AI narrative

The AI narrative has matured beyond hype and fear, and the focus is now on removing obstacles and building frameworks to deliver the benefits of AI responsibly. But our work is far from finished. What people want to achieve collectively must inform and shape AI, rather than AI shaping us.

Since we launched the AI for Good summit in 2017, we have witnessed several breakthroughs in the field.

From DeepMind's AlphaGo beating a Go world champion and now coaching top players, to DeepMind's AlphaFold cracking a 50-year-old protein folding problem, to OpenAI's GPT-3 language model generating increasingly convincing stories, AI applications have advanced rapidly.

While such advances are exciting, too much AI talent and money are channelled into building user profiles and predicting consumer preferences. I remember Jim Hagemann Snabe, current Chairman of Siemens and Maersk, suggesting at the summit a few years ago that AI-powered social media ads were perhaps not the smartest way of using AI.

But the Sustainable Development Goals agreed upon by 193 UN member states offer a more constructive path for AI.

AI and ML can help predict the early onset of Alzheimer's disease and help address the nearly 10 million new cases of dementia that occur every year. They can help predict and mitigate natural disasters, which claimed 1.3 million lives between 1998 and 2017. Computer vision on mobile phones can flag possible skin cancers and help farmers identify plant diseases. AI can help detect financial fraud and fake news. We can even use algorithms to preserve endangered languages.

But even with so many positive use cases, caution is needed. A 2020 study in Nature Communications found that AI could enable 134 of the 169 targets across the SDGs, but could also inhibit 59 of them.

Balancing scale with safety

One of the bottlenecks in using AI for good is figuring out how to scale solutions, especially to the places that need them most. A solution developed in Silicon Valley, in Shenzhen or at a well-funded university may not work smoothly on the ground in the low-resource setting where it is meant to be deployed.

The growth of AI often stokes fears, such as of mass job losses. According to a PwC report, up to one-third of all jobs could be at risk of automation by the mid-2030s. But AI adoption could also create millions of new jobs. How do we manage that bumpy transition?

Automation raises safety concerns. As the saying goes: “Anything that can be hacked will be.”

Even putting a few stickers on a stop sign could confuse an autonomous car. Cybersecurity is crucial in a world of connected devices. Safety and security are vital to build public trust in AI solutions.

Questions of ethics and liability also come into play. In the classic "trolley problem", one must choose whether a runaway trolley continues straight ahead into a group of people or is diverted onto another track, where it would endanger the life of just one person.

Autonomous driving researchers have updated this thought experiment as the "Molly problem": when a self-driving car is involved in an accident with no witnesses, how should the accident be recorded and reported? An ITU focus group is developing a framework for building trust in AI on the road.

Making data work

AI cannot work without data. Humans are biased by nature, so the data we collect is biased too. Data will never be perfect, but we must strive to make the data that feeds algorithms as unbiased as possible. That means building inclusive datasets that produce systems working equally well for people of all genders, ages, skin colours and economic backgrounds.

As we strive to build collaborative solutions through the AI for Good platform, we see the need for unbiased, anonymous data-pooling frameworks.

Whenever we ask who has data, almost everyone in the room raises a hand. But when we ask who is willing to share or donate it, no-one responds. It all comes down to the conditions under which data is shared.

Some techniques make data useful for AI while respecting anonymity and privacy. In federated learning, for instance, data is processed "on the edge": it never leaves the device where it was collected, and only model updates are shared. Homomorphic encryption allows computations on encrypted data, so analysis can be carried out without the underlying data ever being revealed.
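To make the federated learning idea more concrete, here is a minimal Python sketch of federated averaging (FedAvg), one common federated learning algorithm. The article does not name any specific method, so this is an illustration under stated assumptions: a toy one-parameter linear model, hypothetical client datasets, and illustrative function names (`local_update`, `federated_average`) that are not part of any ITU or AI for Good tool. Each simulated client trains on its own data and sends back only a model weight; the server averages those weights in proportion to each client's number of examples.

```python
from typing import List, Tuple

# Hypothetical toy setup: each client holds private (x, y) pairs and the shared
# model is a single weight w in y ≈ w * x. Illustrative only, not an ITU tool.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Train on one client's data with gradient descent; return only the new weight."""
    for _ in range(epochs):
        # Gradient of the mean squared error (w * x - y)^2 with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(w: float, clients: List[Dataset], rounds: int = 20) -> float:
    """Federated averaging: the server sees model weights, never raw data."""
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Each client starts from the current global weight and trains locally.
        local_weights = [local_update(w, data) for data in clients]
        # Average the returned weights, weighted by each client's dataset size.
        w = sum(lw * len(data) for lw, data in zip(local_weights, clients)) / total
    return w


if __name__ == "__main__":
    # Three hypothetical clients whose private data all follow y = 3x.
    clients = [
        [(1.0, 3.0), (2.0, 6.0)],
        [(3.0, 9.0)],
        [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
    ]
    print(federated_average(0.0, clients))  # converges toward 3.0
```

The server code never touches an `(x, y)` pair, which is the privacy property the paragraph describes. Real deployments typically layer secure aggregation, differential privacy and handling of unreliable devices on top of this loop; homomorphic encryption is a separate technique and is not shown here.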

Leaving no one behind

As AI proliferates, there is a risk of deepening the digital divide. Developing countries potentially have the most to gain from AI, but also the most to lose if the building blocks of mass digitization and connectivity are not in place.

AI for Good aims to bring as many people to the table as possible, from technology companies and government agencies to academics, civil society, artists and young people.

It has formed partnerships with 38 UN agencies.

Together, we explore practical, deployable solutions aimed at tackling the biggest challenges confronting humanity.