At the AI for Good Global Summit, the World Internet Conference (WIC) convened a workshop on developing responsible AI, moderated by Xueli Zhang, Deputy Secretary General of WIC. The session brought together experts from international organizations, academia, industry, and United Nations agencies to address both the vision and practice of responsible AI. The discussions highlighted the urgency of ethical governance, the complexity of global coordination, and the real-world experiences shaping how AI can be developed and deployed responsibly.

Setting the stage for responsible AI

Francis Gurry, Vice Chairman of the WIC and former Director General of the World Intellectual Property Organization, opened with a historical perspective on society’s fascination with and fear of new technologies. From Mary Shelley’s Frankenstein to modern debates around AI, he noted that alarm about “the monster in the machine” has long shaped public imagination. He warned, however, that excessive fear can become a barrier to beneficial deployment. Governance, he observed, remains fragmented, with approaches ranging from hard law to self-regulation. What is lacking, he said, is a coordinated international framework:

“It’s very important for the success of AI as well as for public safety, confidence and social cohesion that we have the means to provide assurance to the general public that AI is being and will be deployed in a responsible manner,” Gurry stated.

Global visions for governance and ethics

Liang Hao, Executive Deputy Secretary-General of WIC, described how AI is advancing rapidly in both capability and scope, from large models generating text and images to exoskeleton robots supporting rehabilitation and tourism. Alongside these benefits, risks such as data breaches, deepfakes, misinformation, and energy consumption are intensifying. He emphasized the widening “intelligence divide” that compounds global inequalities in access to digital tools. WIC has responded through reports, summits, and the creation of a specialized AI committee to foster international cooperation.

“AI is transforming the digital divide into an intelligence divide,” Liang said, underscoring the urgency of bridging this gap through inclusive governance.

Frederic Werner, Chief of Strategy and Operations at AI for Good within the International Telecommunication Union, reflected on the evolution of the initiative since its founding in 2017. From a small gathering of a few hundred people, AI for Good has grown into a global platform with tens of thousands of members. He highlighted the importance of turning ethical principles into technical specifications through standards, a process that enables principles to become operational practices.

“Responsible AI cannot be developed in isolation,” Werner said, stressing the need for multistakeholder cooperation and global dialogue.

Wendy Hall, Regius Professor of Computer Science at the University of Southampton, brought a sharp focus to risks for the internet itself. She warned that generative AI could undermine trust online by producing false information at scale, threatening the integrity of the digital environment. She also drew attention to inequalities, both between regions and within institutions, calling for stronger inclusion of women in decision-making.

“If it’s not diverse, it’s not ethical,” Hall argued, linking diversity directly to ethical AI development.

Seán Ó hÉigeartaigh, Programme Director at the University of Cambridge’s Centre for the Future of Intelligence, echoed these concerns, describing AI governance as being in crisis. He noted that while companies are investing billions in advancing frontier AI, funding for independent safety and governance research lags far behind. Lobbying efforts, he added, have sought to weaken regulatory frameworks, leaving society vulnerable to the unchecked power of a few corporations.

“AI governance is, I believe, in crisis but every crisis is an opportunity to achieve change,” he said, pointing to the possibility of stronger international agreements.

Yi Zeng, Professor at the Chinese Academy of Sciences and Co-chair of WIC’s Specialized Committee on AI, emphasized the role of AI in sustainable development. He presented findings from international evaluations of AI governance, noting imbalances between countries and the need for broader preparedness. He also offered scientific evidence that safety improvements do not necessarily compromise performance.

“It is our duty to keep the AI models to be ethical and safe in the first place,” Yi concluded, framing safety as a first principle of responsible AI.

Practices and experiences in responsible AI

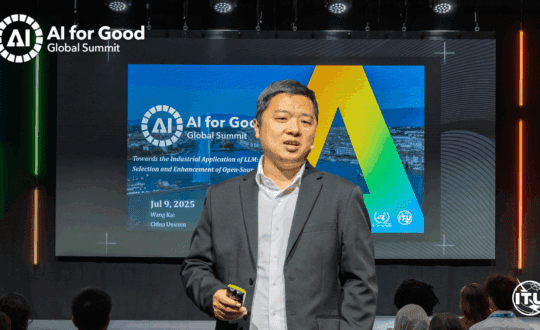

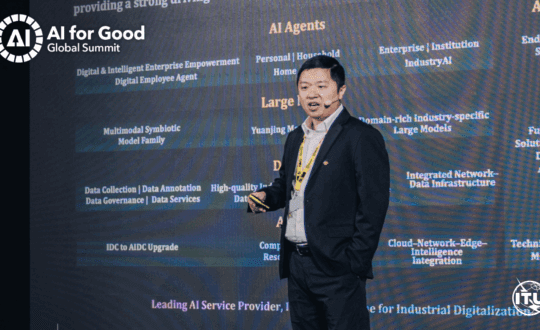

Kai Wei, Vice Chair of WIC’s Specialized Committee on AI and Director at the China Academy of Information and Communications Technology, shared insights from China’s industrial practices. He described the rapid evolution of large language models, significant improvements in benchmarks, and the rise of multimodal systems. With these advances come risks that are no longer hypothetical but visible in real-world cases, from scams using AI-generated voices to vulnerabilities in widely used open-source systems. He outlined China’s progress in standardization, industry self-regulation, and safety commitments.

“AI risks are not just theoretical anymore,” Kai said, pointing to the urgency of embedding safeguards into practice.

A joint presentation by Moises Maldonado, Data Scientist and Innovation Officer at the UN High Commissioner for Refugees, and Liudmila Zavolokina, Professor at the University of Lausanne, showcased a humanitarian application. They examined how AI could support the targeting of cash assistance for asylum seekers in Mexico and Central America. The project balanced technological capability with ethical principles, ensuring that human oversight remained central. Their reflections emphasized the need to build systems from both directions – principles and technology – so that they meet in the middle responsibly.

“The journey towards responsible AI is about aligning AI and ethics into a responsible AI system,” Maldonado said.

“It is safest to build the tunnel from both sides,” Zavolokina added, using a metaphor for balancing ethics and functionality.

John Higgins, Co-lead of the Standards Promotion Program of WIC’s Specialized Committee on AI and Co-founder and Chair of the International AI Governance Association, highlighted how governance mechanisms must be practical, efficient, and integrated into organizations. He explained that sharing best practices across industries helps companies implement responsible approaches while also enabling access to global markets. Governance, he stressed, should be embedded in overall organizational structures rather than treated as an afterthought.

“Let’s work with the organizations that want to do the right thing and let’s help them put in place effective and efficient governance mechanisms,” Higgins said.

Other speakers contributed additional perspectives. Luo Zhong from ITU emphasized the importance of interoperability and technical cooperation, while Ebtesam Almazrouei described innovation projects that demonstrate responsible AI in practice. Chris Brown from the WIC Data Working Group highlighted data governance as a cornerstone of trustworthy systems. Together, these interventions reinforced the need for collaboration across technical, regulatory, and societal dimensions.

Toward a shared framework for responsible AI

Across the session, a common message emerged: responsible AI requires both vision and practice. From global governance debates to humanitarian applications, the workshop demonstrated the breadth of issues at stake. While challenges include corporate influence, fragmented regulations, and widening divides, there was consensus that cooperation and inclusivity are central to moving forward.

As Francis Gurry reminded participants at the outset, “It’s very important for the success of AI as well as for public safety, confidence and social cohesion that we have the means to provide assurance to the general public that AI is being and will be deployed in a responsible manner.”

The session showed that while the pathways are complex, the determination to align technology with human values is shared across sectors and regions.